AI Security Risks: Key Threats, Causes, and How to Prevent Them

Table of Contents

Artificial intelligence now powers critical digital infrastructures across healthcare, finance, transportation, and government sectors worldwide. These systems enhance operational efficiency while simultaneously introducing new cybersecurity capabilities to protect sensitive data networks. However, AI security risks have emerged as significant concerns for organizations implementing these technologies at scale. The integration of AI creates novel vulnerabilities that traditional security approaches cannot adequately address. AI security risks require specialized understanding and mitigation strategies to protect against increasingly sophisticated threats. This comprehensive guide explores the most pressing AI security concerns, practical solutions for addressing these vulnerabilities, and future developments in the AI security landscape.

Table of Contents

AI Security Risks in 2025: A Practical Overview

The AI threat landscape continues to evolve rapidly as adoption accelerates across industries and applications. Attackers increasingly leverage AI technologies to automate reconnaissance, vulnerability discovery, and exploit development at unprecedented scale. These adversaries can now customize attacks based on organizational profiles with minimal human intervention. Meanwhile, poorly secured AI systems themselves have become prime targets for exploitation due to their access to sensitive data. Machine learning models often contain vulnerabilities that allow manipulation through adversarial inputs or extraction of training data.

The concept of dual-use technology applies strongly to AI systems that serve beneficial purposes but enable harmful activities. Facial recognition systems designed for security can enable mass surveillance when deployed without proper safeguards. Natural language processing tools that summarize content may inadvertently reveal confidential information through prompt manipulation techniques. Voice synthesis technologies create new possibilities for sophisticated social engineering attacks that bypass traditional security controls.

The year 2025 presents unique challenges due to democratized access to powerful AI capabilities previously limited to specialized research teams. Open-source models with billions of parameters now run on consumer hardware with minimal technical expertise required. This accessibility expands the potential attack surface exponentially as organizations implement AI without sufficient security controls. Smaller organizations without dedicated security teams face particular challenges in securing their AI implementations against increasingly sophisticated threats.

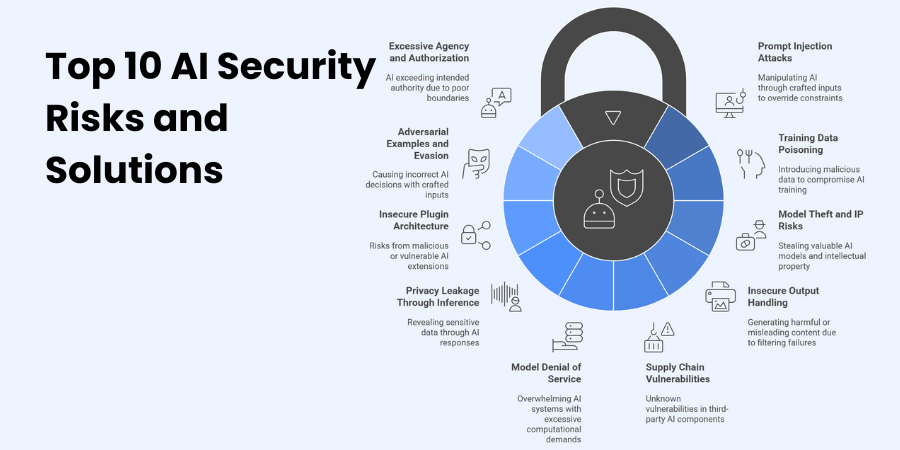

Top 10 AI Security Risks and Solutions

1. Prompt Injection Attacks

Adversaries can manipulate AI systems through carefully crafted inputs that override intended constraints or extract sensitive information. These attacks exploit the fundamental mechanisms by which language models process instructions and generate responses. Organizations must implement robust input validation, rate limiting, and prompt engineering techniques to mitigate these risks. Creating security boundaries between user inputs and system instructions provides essential protection against these increasingly common attacks. Regular penetration testing specifically targeting prompt manipulation scenarios helps identify vulnerabilities before exploitation occurs.

2. Training Data Poisoning

Malicious actors can compromise AI systems by introducing manipulated data during the training process to create hidden vulnerabilities. This poisoning can create backdoors, bias model outputs, or degrade performance in targeted scenarios without obvious detection. Organizations should implement rigorous data validation processes and maintain comprehensive audit trails for all training datasets. Differential privacy techniques can protect against inference attacks while preserving model utility for legitimate applications. Regular anomaly detection during training helps identify potential poisoning attempts before models enter production environments.

3. Model Theft and Intellectual Property Risks

Valuable AI models represent significant intellectual property that competitors or criminals may attempt to steal through various techniques. Extraction attacks can reconstruct model functionality through systematic querying of public-facing interfaces without authorized access. Organizations should implement:

- Strict access controls

- API rate limiting

- Output randomization techniques

- Watermarking model outputs

- Monitoring for unusual query patterns

These measures help identify stolen intellectual property and detect potential extraction attempts before complete model theft occurs.

4. Insecure Output Handling

AI systems may generate harmful, biased, or misleading content when output filtering mechanisms fail to catch problematic responses. These failures create legal, reputational, and security risks for organizations deploying generative AI technologies in customer-facing applications. Implementing robust content filtering, human review processes, and output sandboxing provides essential protection against these risks. Organizations should establish clear policies regarding acceptable AI outputs and implement technical controls enforcing these boundaries. Regular red team exercises help identify potential output manipulation vulnerabilities before public exposure occurs.

5. Supply Chain Vulnerabilities

Pre-trained models and third-party AI components may contain unknown vulnerabilities or backdoors inserted during development without detection. Organizations often lack visibility into the security practices of their AI component suppliers and integration partners. Implementing comprehensive vendor security assessments and contractual security requirements helps mitigate these increasingly common risks. Organizations should maintain accurate inventories of all AI components and their origins throughout the development lifecycle. Regular security scanning of third-party models before integration into production systems provides essential protection.

6. Model Denial of Service

Attackers can overwhelm AI systems through specially crafted inputs that consume excessive computational resources and degrade service availability. These attacks exploit the variable processing requirements of complex inputs to create system slowdowns or outages. Organizations should protect against these attacks through:

- Resource consumption limits

- Request prioritization

- Computational budgeting

- Monitoring for unusual processing patterns

- Load testing with adversarial inputs

These measures help identify potential denial of service vulnerabilities before production deployment causes service disruptions.

7. Privacy Leakage Through Inference

AI systems may inadvertently reveal sensitive information about their training data through responses to carefully crafted queries. These membership inference attacks can expose confidential data used during model development without direct database access. Implementing differential privacy techniques, output randomization, and query filtering helps protect against these sophisticated vulnerabilities. Organizations should conduct regular privacy audits to identify potential information leakage through model outputs. Limiting the precision of model outputs reduces the risk of sensitive data extraction through inference attacks.

8. Insecure Plugin Architecture

AI systems with plugin capabilities face additional risks from malicious or vulnerable extensions that expand functionality without security controls. These plugins often receive elevated privileges within the AI environment without sufficient security validation or monitoring. Organizations should implement strict plugin validation, sandboxing, and permission management systems to prevent unauthorized actions. Regular security assessments of all plugins before integration helps prevent compromise through this expanding attack surface. Monitoring plugin behavior during operation can identify potentially malicious activities before significant damage occurs.

9. Adversarial Examples and Evasion Attacks

Specially crafted inputs can cause AI systems to make incorrect decisions or classifications while appearing normal to human observers. These adversarial examples exploit fundamental vulnerabilities in machine learning algorithms that process visual or textual information. Implementing adversarial training, input preprocessing, and ensemble methods improves model robustness against these sophisticated attacks. Organizations should regularly test systems with adversarial examples to identify potential vulnerabilities before exploitation. Maintaining human oversight for critical decisions provides an essential safety mechanism against manipulation attempts.

10. Excessive Agency and Authorization

AI systems may exceed their intended authority when authorization boundaries remain poorly defined or improperly enforced. This excessive agency creates significant security and compliance risks for organizations deploying autonomous systems. Implementing principle of least privilege, clear authorization boundaries, and continuous monitoring helps contain these emerging risks. Organizations should establish explicit policies regarding AI system capabilities and permissions throughout the operational lifecycle. Regular authorization reviews ensure AI systems maintain appropriate access levels as requirements evolve.

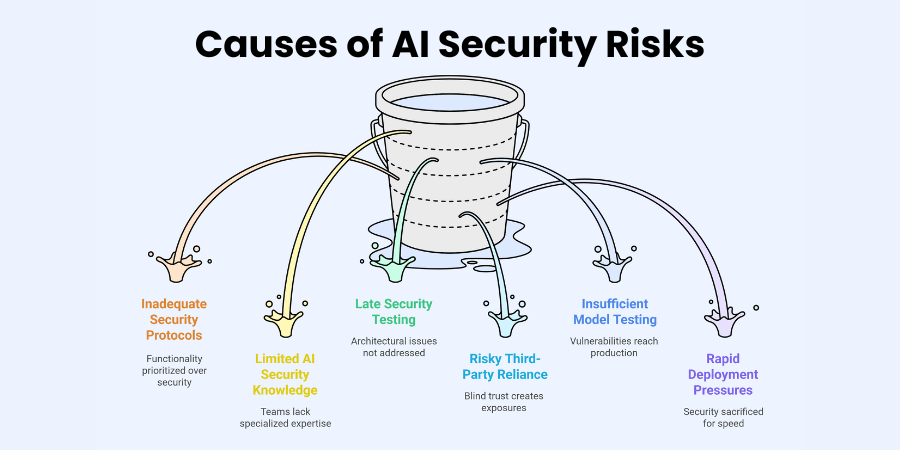

Causes of AI Security Risks

Insufficient security protocols during the AI development lifecycle represent a primary cause of vulnerabilities in deployed systems. Many organizations prioritize functionality and performance over security considerations during model development and deployment phases. Development teams often lack specialized knowledge regarding AI-specific security vulnerabilities and mitigation strategies for machine learning systems. This knowledge gap leads to implementations that fail to address fundamental security requirements for complex AI architectures. Security testing frequently occurs too late in the development process to address architectural vulnerabilities effectively.

Reliance on third-party datasets and tools introduces additional risks when organizations lack visibility into their security practices. Pre-trained models may contain vulnerabilities, backdoors, or biases inherited from their original training environments without clear documentation. Organizations frequently implement these components without sufficient security validation or understanding of their internal mechanisms. This blind trust creates significant security exposures that remain difficult to detect through conventional security testing approaches. Comprehensive supply chain security requires specialized techniques specifically designed for AI components.

Insufficient model testing before deployment allows vulnerabilities to reach production environments where they face active exploitation attempts. Many organizations lack testing frameworks specifically designed to identify AI-specific security issues like prompt injection vulnerabilities. Testing often focuses on functional requirements rather than adversarial scenarios that malicious actors might exploit in real-world situations. This limited testing scope leaves critical vulnerabilities undetected until after deployment exposes systems to attacks. Comprehensive security testing requires specialized expertise in AI vulnerability assessment techniques.

Rapid deployment without thorough security audits creates additional risks as organizations rush to implement AI capabilities. Competitive pressures drive accelerated deployment timelines that often sacrifice security considerations for market advantage. Security teams frequently lack sufficient time to conduct comprehensive assessments before production release dates arrive. This rushed approach prevents proper implementation of security controls specifically designed for AI systems. Organizations must balance deployment speed with appropriate security validation to prevent serious vulnerabilities.

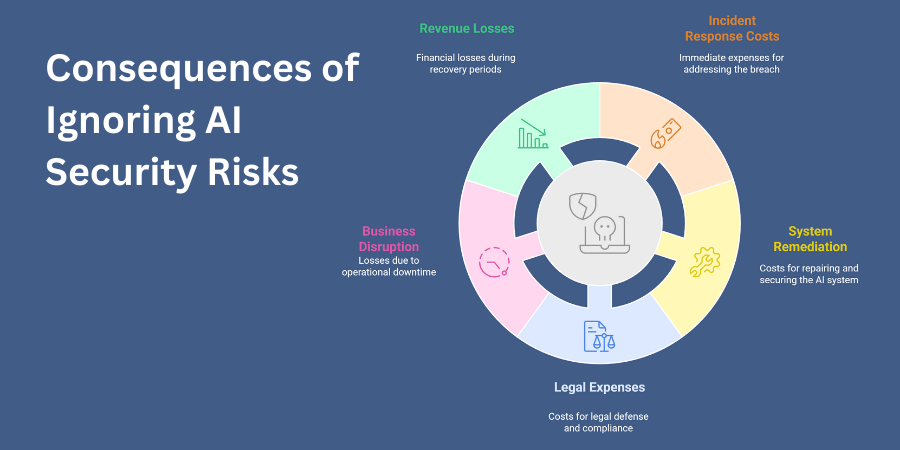

Consequences of Ignoring AI Security Risks

Financial losses from AI security breaches can reach catastrophic levels due to the critical nature of systems now employing artificial intelligence. The consequences include:

- Remediation costs averaging millions of dollars per incident

- Immediate incident response expenses

- System remediation requirements

- Legal expenses and regulatory penalties

- Business disruption and lost productivity

- Revenue losses during recovery periods

Investment in preventative security measures typically costs significantly less than breach recovery expenses across most industries.

Privacy breaches resulting from AI security failures create substantial legal and regulatory exposure for affected organizations. Modern privacy regulations impose severe penalties for unauthorized data exposure, reaching up to 4% of global annual revenue. AI systems often process highly sensitive personal information that requires stringent protection under various regulatory frameworks. The ability of AI systems to infer sensitive attributes from seemingly innocuous data creates additional privacy concerns. Organizations must implement comprehensive privacy controls specifically designed for AI applications.

Reputational damage following AI security incidents can persist long after technical remediation completes and systems return to normal. Public perception of AI already includes significant concerns regarding privacy, bias, and security implications for individuals. Security failures reinforce these negative perceptions and erode trust in organizations deploying AI technologies for critical functions. Rebuilding customer and partner confidence after significant AI security incidents requires substantial time and resources. Proactive security measures help preserve organizational reputation and stakeholder trust throughout AI adoption.

Public safety threats emerge when AI systems controlling critical infrastructure or physical systems experience security compromises. Autonomous vehicles, medical devices, and industrial control systems increasingly incorporate AI components vulnerable to security attacks. Manipulation of these systems could potentially cause physical harm to individuals or communities relying on their proper function. The interconnected nature of modern infrastructure amplifies these risks through cascading failures across multiple systems. Organizations must implement defense-in-depth strategies appropriate for safety-critical AI applications.

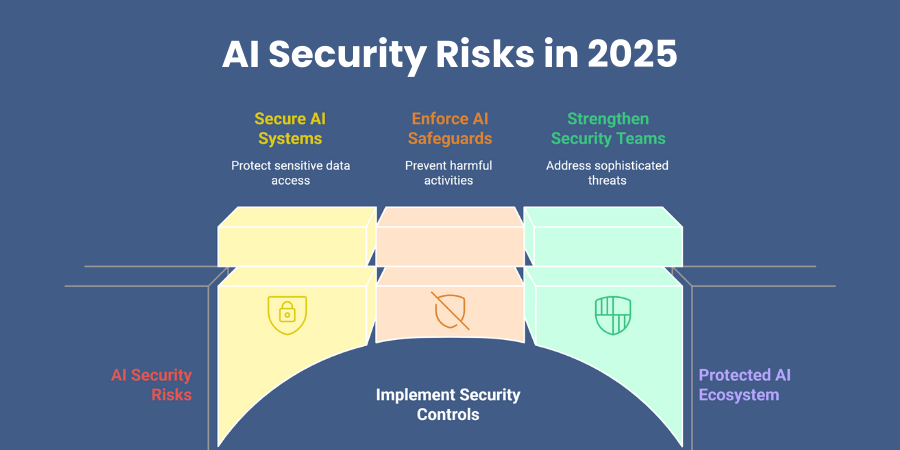

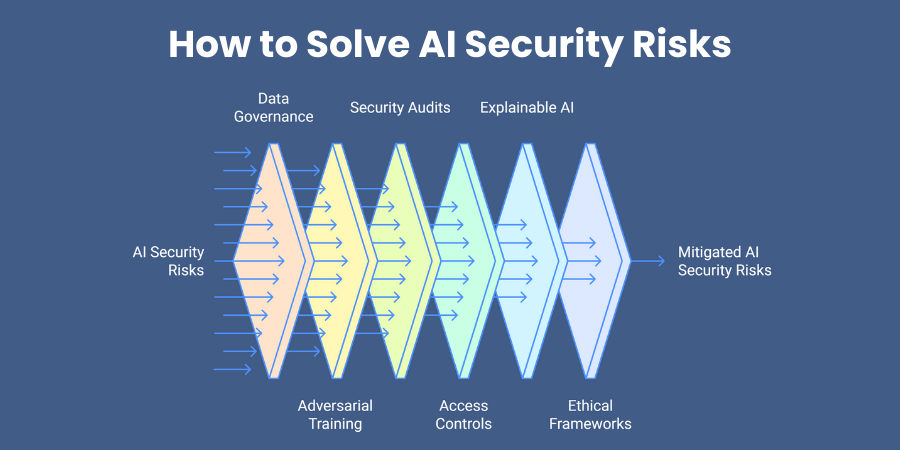

How to Solve AI Security Risks

Addressing AI security risks requires a proactive, multi-layered approach that combines technical safeguards, ethical design, and continuous monitoring. Since AI systems are vulnerable at every stage — from data collection to deployment — solutions should focus on securing data, hardening models, and controlling access. Below are key strategies to effectively mitigate these risks.

1. Implement Robust Data Governance

Ensure data integrity by using only trusted, verified datasets. Regularly clean and validate data to prevent data poisoning attacks. Limit the use of publicly scraped or unverified third-party datasets.

2. Use Adversarial Training

Train AI models with adversarial examples — intentionally altered inputs — to make them resilient against manipulation. This helps models learn to recognize and ignore malicious patterns.

3. Conduct Regular Security Audits

Perform frequent AI model audits to detect vulnerabilities early. This should include penetration testing, code reviews, and monitoring for suspicious activity in deployed systems.

4. Apply Strong Access Controls

Restrict who can view, modify, or export AI models. Use authentication, encryption, and role-based access to prevent unauthorized tampering or theft.

5. Integrate Explainable AI (XAI)

Design AI systems with transparency features so that decisions can be understood and verified. This helps in detecting unusual or manipulated model behaviors.

6. Establish Ethical AI Frameworks

Adopt clear ethical guidelines for AI development, focusing on fairness, bias reduction, and responsible use. Include human oversight in critical decision-making areas.

Table: Solutions to AI Security Risks

| Security Risk | Solution | Impact |

| Data Poisoning | Use trusted datasets, apply data validation checks | Prevents malicious data from corrupting AI models |

| Adversarial Attacks | Implement adversarial training and model hardening | Increases resistance to manipulation attempts |

| Model Inversion | Encrypt sensitive training data and limit model queries | Protects privacy and sensitive information |

| Model Theft | Apply access controls and watermark AI models | Prevents intellectual property loss |

| Bias Exploitation | Use diverse training data and bias detection tools | Reduces manipulation through systemic bias |

| API & Integration Vulnerability | Secure APIs with authentication and monitor traffic | Blocks unauthorized access to AI functionalities |

The Future of AI and Cybersecurity

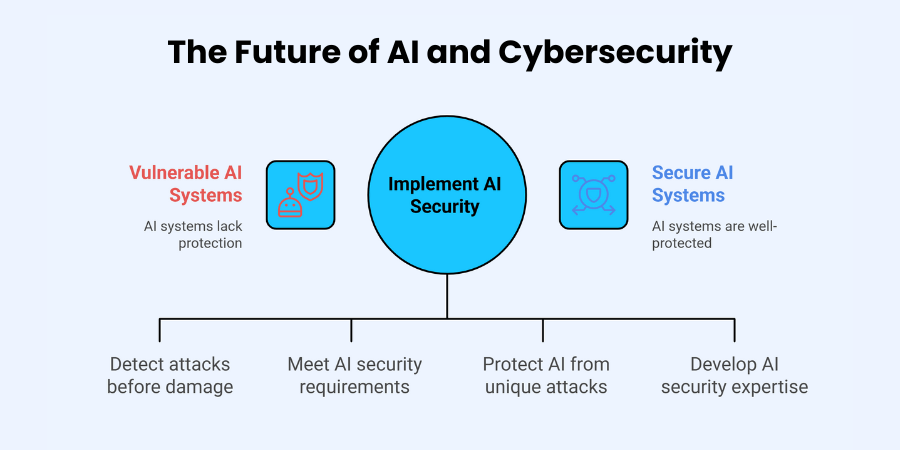

The relationship between AI and cybersecurity continues evolving toward increasingly sophisticated defensive capabilities and threats. Security platforms now incorporate machine learning to detect anomalous patterns indicating potential attacks before significant damage occurs. These systems analyze vast quantities of security telemetry that would overwhelm human analysts working without AI assistance. Defensive AI capabilities will continue advancing toward autonomous security systems capable of identifying and responding to threats without human intervention. This automation becomes increasingly necessary as attack speeds exceed human response capabilities.

Regulatory frameworks specifically addressing AI security requirements continue emerging across global jurisdictions with increasing technical specificity. The European Union’s AI Act establishes comprehensive requirements for high-risk AI systems including robust security controls. Similar regulations appear in other regions as governments recognize the critical importance of AI security standards. Organizations must prepare for increasing compliance requirements specific to AI systems across global markets. These regulations will likely mandate security testing, documentation, and ongoing monitoring for AI deployments.

AI-specific security platforms continue emerging to address the unique challenges of protecting machine learning systems from specialized attacks. These specialized tools provide capabilities beyond traditional security solutions that cannot adequately protect AI components. Vulnerability scanning specifically designed for machine learning models helps identify previously undetectable security issues before exploitation. Runtime protection systems monitor AI behavior for signs of manipulation or compromise during operational use. Organizations should evaluate these specialized solutions as part of comprehensive AI security strategies.

The demand for AI-literate security professionals continues growing as organizations recognize the specialized knowledge required for effective protection. Traditional security training rarely covers the unique vulnerabilities and protection mechanisms for AI systems. Universities and professional organizations now develop specialized AI security curricula to address this knowledge gap. Organizations must invest in training existing security teams on AI-specific threats and controls. Cross-functional collaboration between security and data science teams becomes increasingly important for effective AI protection.

結論

AI security risks present significant challenges that organizations must address through comprehensive security strategies and specialized controls. The integration of artificial intelligence into critical systems creates novel vulnerabilities requiring new approaches to security beyond traditional methods. Organizations must implement robust security practices throughout the AI development lifecycle from initial design through deployment and monitoring. Regular security assessments specifically targeting AI vulnerabilities help identify potential issues before exploitation occurs.

Responsible AI practices, including thorough risk assessments and security testing, provide essential protection against emerging threats targeting machine learning systems. Organizations should establish clear governance frameworks defining security requirements for all AI implementations across their technology portfolio. These frameworks must address the unique characteristics of machine learning systems that traditional security approaches cannot adequately protect. Staying informed about evolving threats and mitigation strategies helps organizations maintain appropriate security postures.

Organizations must invest in secure AI systems to protect their operations, reputation, and stakeholders from increasingly sophisticated attacks. This investment includes specialized tools, training, and processes specifically designed for AI security throughout the system lifecycle. The consequences of security failures continue growing as AI systems gain additional capabilities and access to sensitive functions. Proactive security measures cost significantly less than responding to serious security incidents after they occur.

よくある質問

What are the most common AI security attacks?

The most common AI security attacks include prompt injection, training data poisoning, and model extraction attempts targeting deployed systems. Adversarial examples that manipulate model outputs also represent significant threats to AI systems processing visual data. Organizations frequently encounter privacy attacks attempting to extract sensitive information from training data through inference techniques. Implementation of comprehensive security controls specifically designed for AI helps mitigate these common attack vectors.

How can organizations protect against AI security risks?

Organizations can protect against AI security risks through comprehensive security programs specifically addressing machine learning vulnerabilities throughout development. Implementation of secure development practices throughout the AI lifecycle provides fundamental protection against many common attack vectors. Regular security testing using specialized tools helps identify vulnerabilities before exploitation by malicious actors. Maintaining security awareness regarding emerging threats ensures appropriate evolution of defensive measures as attack techniques advance.

What skills are needed for AI security professionals?

AI security professionals need a combination of traditional cybersecurity knowledge and specialized understanding of machine learning systems. Understanding model architectures, training processes, and inference mechanisms provides essential context for security analysis and vulnerability assessment. Knowledge of adversarial machine learning techniques helps identify potential vulnerabilities during security assessments of AI systems. Programming skills, particularly in Python, enable effective analysis and testing of AI components.

How will AI security evolve in the next five years?

AI security will evolve toward greater automation and specialized protection mechanisms over the next five years. Regulatory requirements specifically addressing AI security will become more comprehensive and widespread across global markets. Security tools designed specifically for machine learning systems will continue maturing with enhanced capabilities for vulnerability detection. Organizations will increasingly incorporate AI security considerations into their broader risk management and governance frameworks.

Shaif Azad

Related Post

エンタープライズ向け生成AI:活用事例、課題、ベストプラクティス

Are you wondering how your organization can stay competitive in today’s rapidly evolving business landscape? The...

会話型AIによるビジネスROIの可視化

Are you considering conversational AI for your business but questioning whether the investment will deliver real...

チャットボットが企業のコスト削減と迅速なスケール拡大を実現する方法

Are you lying awake wondering how your business can cut operational costs without sacrificing quality? Have...